JavaScript objects can have arbitrary properties associated with them. The names of object properties can contain any character. One interesting case that a JavaScript engine can choose to optimize for is properties whose names are purely numeric, most specifically array indices.

In V8, properties with integer names — the most common form of which are objects generated by the Array constructor — are handled specially. Although in many circumstances these numerically-indexed properties behave just like other properties, V8 chooses to store them separately from non-numeric properties for optimization purposes. Internally, V8 even gives these properties a special name: elements. Objects have properties that map to values, whereas arrays have indices that map to elements.
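One place where the split between integer-named properties and all other properties is visible from plain JavaScript is key enumeration order: the language requires integer-like keys to be listed first, in ascending numeric order, followed by string keys in insertion order. The sketch below only illustrates that observable behavior. It does not expose V8’s internal storage, and the object and its keys are made up for illustration.

```javascript
// Hypothetical object mixing integer-like and string property names.
const mixed = { b: 'second', 2: 'two', a: 'first', 1: 'one' };

// Integer-like keys come first in ascending numeric order, then the
// string keys in the order in which they were added.
const mixedKeys = Object.keys(mixed);
console.log(mixedKeys); // ['1', '2', 'b', 'a']
```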

Although these internals are never directly exposed to JavaScript developers, they explain why certain code patterns are faster than others.

Common elements kinds

While running JavaScript code, V8 keeps track of what kind of elements each array contains. This information allows V8 to optimize any operations on the array specifically for this type of element. For example, when you call reduce, map, or forEach on an array, V8 can optimize those operations based on what kind of elements the array contains.

Take this array, for example:

const array = [1, 2, 3];

What kinds of elements does it contain? If you asked the typeof operator about each element, it would tell you they are numbers. At the language level, that’s all you get: JavaScript doesn’t distinguish between integers, floats, and doubles. They’re all just numbers. However, at the engine level, we can make more precise distinctions. The elements kind for this array is PACKED_SMI_ELEMENTS. In V8, the term Smi refers to the particular format used to store small integers. (We’ll get to the PACKED part in a minute.)

Later adding a floating-point number to the same array transitions it to a more generic elements kind:

const array = [1, 2, 3]; // elements kind: PACKED_SMI_ELEMENTS

array.push(4.56); // elements kind: PACKED_DOUBLE_ELEMENTS

Adding a string literal to the array changes its elements kind once again.

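const array = [1, 2, 3]; // elements kind: PACKED_SMI_ELEMENTS

array.push(4.56); // elements kind: PACKED_DOUBLE_ELEMENTS

array.push('x'); // elements kind: PACKED_ELEMENTS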

We’ve seen three distinct elements kinds so far, with the following basic types:

- Small integers, also known as Smi.

- Doubles, for floating-point numbers and integers that cannot be represented as a Smi.

- Regular elements, for values that cannot be represented as Smi or doubles.

Note that doubles form a more general variant of Smi, and regular elements are another generalization on top of doubles. The set of numbers that can be represented as a Smi is a subset of the numbers that can be represented as a double.
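The three basic types can be sketched as a classification of a single value. This is a rough userland model, not V8 code: the function name `basicKindFor` is hypothetical, and the Smi range used here (31-bit signed integers) is an assumption, since the exact range depends on the platform and on how V8 was built.

```javascript
// Assumption: a 31-bit signed Smi range. The real range depends on the
// platform and build configuration.
const SMI_MIN = -(2 ** 30);
const SMI_MAX = 2 ** 30 - 1;

// Hypothetical helper: returns the most specific basic type that could
// hold `value`.
function basicKindFor(value) {
  if (typeof value !== 'number') return 'ELEMENTS';
  const fitsInSmi =
    Number.isInteger(value) &&
    !Object.is(value, -0) && // -0 has no small-integer representation
    value >= SMI_MIN &&
    value <= SMI_MAX;
  return fitsInSmi ? 'SMI' : 'DOUBLE';
}

console.log(basicKindFor(3));       // 'SMI'
console.log(basicKindFor(4.56));    // 'DOUBLE'
console.log(basicKindFor(2 ** 31)); // 'DOUBLE' (an integer, but too large)
console.log(basicKindFor('x'));     // 'ELEMENTS'
```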

What’s important here is that elements kind transitions only go in one direction: from specific (e.g. PACKED_SMI_ELEMENTS) to more general (e.g. PACKED_ELEMENTS). Once an array is marked as PACKED_ELEMENTS, it cannot go back to PACKED_DOUBLE_ELEMENTS, for example.

So far, we’ve learned the following:

- V8 assigns an elements kind to each array.

- The elements kind of an array is not set in stone; it can change at runtime. In the earlier example, we transitioned from PACKED_SMI_ELEMENTS to PACKED_ELEMENTS.

- Elements kind transitions can only go from specific kinds to more general kinds.

PACKED vs. HOLEY kinds

So far, we’ve only been dealing with dense or packed arrays. Creating holes in the array (i.e. making the array sparse) downgrades the elements kind to its “holey” variant:

const array = [1, 2, 3, 4.56, 'x']; // elements kind: PACKED_ELEMENTS

array.length; // 5

array[9] = 1; // array[5] through array[8] are now holes: HOLEY_ELEMENTS

V8 makes this distinction because operations on packed arrays can be optimized more aggressively than operations on holey arrays. For packed arrays, most operations can be performed efficiently. In comparison, operations on holey arrays require additional checks and expensive lookups on the prototype chain.
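The prototype chain lookup is observable from JavaScript, which is why the engine cannot simply skip it. The following sketch contrasts a hole with a slot that holds the value undefined. It temporarily defines an indexed property on Array.prototype purely for demonstration (never do this in real code), and all variable names are illustrative.

```javascript
const holey = [1, , 3];           // index 1 is a hole
const filled = [1, undefined, 3]; // index 1 holds the value undefined

// A hole is not an own property of the array; a stored undefined is.
const holeIsOwn = holey.hasOwnProperty(1);   // false
const filledIsOwn = filled.hasOwnProperty(1); // true

let holeRead;
let filledRead;
Array.prototype[1] = 'from prototype'; // demonstration only
try {
  holeRead = holey[1];    // falls through to the prototype
  filledRead = filled[1]; // found on the array itself
} finally {
  delete Array.prototype[1]; // always clean up
}

console.log(holeRead);   // 'from prototype'
console.log(filledRead); // undefined
```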

Each of the basic elements kinds we’ve seen so far (i.e. Smis, doubles, and regular elements) comes in two flavors: the packed and the holey version. Not only can we transition from, say, PACKED_SMI_ELEMENTS to PACKED_DOUBLE_ELEMENTS, we can also transition from any PACKED kind to its HOLEY counterpart.

To recap:

- The most common elements kinds come in PACKED and HOLEY flavors.

- Operations on packed arrays are more efficient than operations on holey arrays.

- Elements kinds can transition from PACKED to HOLEY flavors.

The elements kind lattice

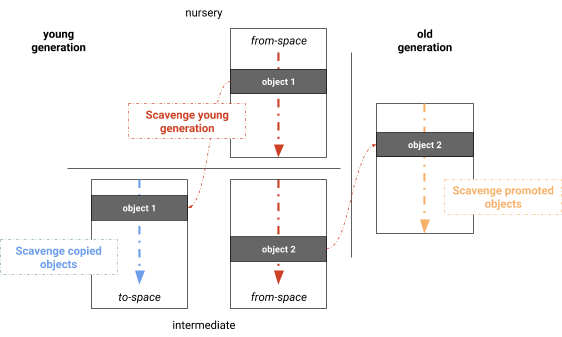

V8 implements this tag transitioning system as a lattice. Here’s a simplified visualization of that featuring only the most common elements kinds:

![]()

It’s only possible to transition downwards through the lattice. Once a single floating-point number is added to an array of Smis, it is marked as DOUBLE, even if you later overwrite the float with a Smi. Similarly, once a hole is created in an array, it’s marked as holey forever, even when you fill it later.
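The one-way movement through the lattice can be modeled in a few lines. This is a simplified sketch covering only the six common kinds, not V8’s implementation: the `{ type, holey }` representation and the names `kindName` and `transition` are assumptions made for illustration.

```javascript
// Basic types ordered from most specific to most general.
const BASIC_TYPES = ['SMI', 'DOUBLE', 'ANY'];

// Formats a modeled kind using the naming scheme from the article.
function kindName({ type, holey }) {
  const prefix = holey ? 'HOLEY' : 'PACKED';
  return type === 'ANY' ? `${prefix}_ELEMENTS` : `${prefix}_${type}_ELEMENTS`;
}

// Moves `current` just far enough down the lattice to cover `required`.
// It never moves back up: the type rank can only grow, and once the
// holey flag is set it stays set.
function transition(current, required) {
  const rank = Math.max(
    BASIC_TYPES.indexOf(current.type),
    BASIC_TYPES.indexOf(required.type)
  );
  return { type: BASIC_TYPES[rank], holey: current.holey || required.holey };
}

let kind = { type: 'SMI', holey: false };

kind = transition(kind, { type: 'DOUBLE', holey: false });
const afterDouble = kindName(kind); // 'PACKED_DOUBLE_ELEMENTS'

// Storing a Smi afterwards does not bring the kind back.
kind = transition(kind, { type: 'SMI', holey: false });
const afterSmiAgain = kindName(kind); // 'PACKED_DOUBLE_ELEMENTS'

// Creating a hole switches to the holey variant of the same type.
kind = transition(kind, { type: 'SMI', holey: true });
const afterHole = kindName(kind); // 'HOLEY_DOUBLE_ELEMENTS'

console.log(afterDouble, afterSmiAgain, afterHole);
```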

V8 currently distinguishes 21 different elements kinds, each of which comes with its own set of possible optimizations.

In general, more specific elements kinds enable more fine-grained optimizations. The further down the elements kind is in the lattice, the slower manipulations of that object might be. For optimal performance, avoid needlessly transitioning to less specific types — stick to the most specific one that’s applicable to your situation.

Performance tips

In most cases, elements kind tracking works invisibly under the hood and you don’t need to worry about it. But here are a few things you can do to get the greatest possible benefit from the system.

Avoid creating holes

Let’s say we’re trying to create an array, for example:

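const array = new Array(3); // sparse from the start: HOLEY_SMI_ELEMENTS

array[0] = 'a'; // a string, so the kind transitions to HOLEY_ELEMENTS

array[1] = 'b';

array[2] = 'c'; // every slot is now filled, but the kind stays HOLEY_ELEMENTS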

Once the array is marked as holey, it’s holey forever — even if it’s packed later! Any operation on the array from then on is potentially slower than it could be. If you plan on performing lots of operations on the array, and you’d like to optimize those operations, avoid creating holes in the array. V8 can deal with packed arrays more efficiently.

A better way of creating an array is to use a literal instead:

const array = ['a', 'b', 'c'];

If you don’t know all the values ahead of time, create an array, and later push the values to it.

const array = [];

array.push(someValue);

array.push(someOtherValue);

This approach ensures that the array never transitions to a holey elements kind. As a result, V8 can optimize any future operations on the array more efficiently.
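When the values are computed rather than known up front, the same push-based approach can be wrapped in a small helper. The sketch below is illustrative: `buildByPushing` is a hypothetical name, and the elements kind itself cannot be read from plain JavaScript, so the code only shows the observable difference between a preallocated array (which starts out with holes) and one grown by pushing.

```javascript
// Hypothetical helper: builds an array of `length` values by pushing,
// so no index is ever skipped while the array grows.
function buildByPushing(length, makeValue) {
  const result = [];
  for (let index = 0; index < length; index++) {
    result.push(makeValue(index));
  }
  return result;
}

// A preallocated array has a length but no own elements yet.
const preallocated = new Array(3);
const startsWithHoles = !preallocated.hasOwnProperty(0); // true

const squares = buildByPushing(3, (index) => index * index);
console.log(squares); // [0, 1, 4]
```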

Avoid reading beyond the length of the array

A similar situation to hitting a hole occurs when reading beyond the length of the array, e.g. reading array[42] when array.length === 5. In this case, the array index 42 is out of bounds, the property is not present on the array itself, and so the JavaScript engine has to perform the same expensive prototype chain lookups.

Don’t write your loops like this:

for (let i = 0, item; (item = items[i]) != null; i++) {

  doSomething(item);

}

This code reads all the elements in the array, and then one more. It only ends once it finds an undefined or null element. (jQuery uses this pattern in a few places.)

Instead, write your loops the old-fashioned way, and just keep iterating until you hit the last element.

for (let index = 0; index < items.length; index++) {

  const item = items[index];

  doSomething(item);

}

When the collection you’re looping over is iterable (as is the case for arrays and NodeLists), that’s even better: just use for-of.

for (const item of items) {

  doSomething(item);

}

For arrays specifically, you could use the forEach built-in:

items.forEach((item) => {

  doSomething(item);

});

Nowadays, the performance of both for-of and forEach is on par with the old-fashioned for loop.

Avoid reading beyond the array’s length! Doing so is just as bad as hitting a hole in an array. In this case, V8’s bounds check fails, the check to see if the property is present fails, and then we need to look up the prototype chain.
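To make the extra read concrete, the following sketch records which indices each loop style touches. The `read` wrapper and the log are hypothetical instrumentation added for illustration; they are not part of either loop pattern.

```javascript
const items = ['a', 'b', 'c'];

// Hypothetical instrumentation: remembers every index that gets read.
const readLog = [];
const read = (array, index) => {
  readLog.push(index);
  return array[index];
};

// Sentinel-style loop: keeps reading until it sees null or undefined.
for (let i = 0, item; (item = read(items, i)) != null; i++) {
  // process item
}
const sentinelReads = readLog.splice(0); // take the log and empty it

// Length-bounded loop: never reads past the last element.
for (let index = 0; index < items.length; index++) {
  read(items, index);
}
const boundedReads = readLog.splice(0);

console.log(sentinelReads); // [0, 1, 2, 3] (index 3 is out of bounds)
console.log(boundedReads);  // [0, 1, 2]
```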

Avoid elements kind transitions

In general, if you need to perform lots of operations on an array, try sticking to an elements kind that’s as specific as possible, so that V8 can optimize those operations as much as possible.

This is harder than it seems. For example, just adding -0 to an array of small integers is enough to transition it to PACKED_DOUBLE_ELEMENTS.

const array = [3, 2, 1, +0]; // PACKED_SMI_ELEMENTS

array.push(-0); // PACKED_DOUBLE_ELEMENTS

As a result, any future operations on this array are optimized in a completely different way than they would be for Smis.

Avoid -0, unless you explicitly need to differentiate -0 and +0 in your code. (You probably don’t.)

The same thing goes for NaN and Infinity. They are represented as doubles, so adding a single NaN or Infinity to an array of SMI_ELEMENTS transitions it to DOUBLE_ELEMENTS.

const array = [3, 2, 1]; // PACKED_SMI_ELEMENTS

array.push(NaN, Infinity); // PACKED_DOUBLE_ELEMENTS

If you’re planning on performing lots of operations on an array of integers, consider normalizing -0 and blocking NaN and Infinity when initializing the values. That way, the array sticks to the PACKED_SMI_ELEMENTS kind. This one-time normalization cost can be worth the later optimizations.
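One possible shape for such a normalization step is sketched below. The function name `toSmiFriendly` and the choice to throw on NaN and Infinity are assumptions made for illustration. Note that this alone does not guarantee a Smi elements kind: fractional values or integers outside the Smi range would still require doubles.

```javascript
// Hypothetical helper: copies `values`, mapping -0 to +0 and rejecting
// anything that is not a finite number (NaN, Infinity, non-numbers).
function toSmiFriendly(values) {
  const result = [];
  for (const value of values) {
    if (!Number.isFinite(value)) {
      throw new RangeError(`Expected a finite number, got ${value}`);
    }
    // `-0 === 0` is true, so this maps -0 to +0 and leaves every other
    // value untouched.
    result.push(value === 0 ? 0 : value);
  }
  return result;
}

const normalized = toSmiFriendly([3, 2, 1, -0]);
console.log(Object.is(normalized[3], 0)); // true: the -0 became +0
```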

In fact, if you’re doing mathematical operations on an array of numbers, consider using a TypedArray. We have specialized elements kinds for those, too.
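As a brief illustration of the TypedArray option, a typed array fixes its element type when it is created, so stored values are coerced to that type on write. The variable names below are made up for the example, and the sketch shows only standard language behavior, not which elements kinds V8 uses internally.

```javascript
// A Float64Array only ever holds doubles.
const samples = new Float64Array([1.5, 2.5, 3]);
const total = samples.reduce((sum, value) => sum + value, 0);
console.log(total); // 7

// An Int32Array coerces on write: -0 and NaN are both stored as 0.
const counters = new Int32Array([3, 2, 1, -0, NaN]);
console.log(Array.from(counters)); // [3, 2, 1, 0, 0]
```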

Prefer arrays over array-like objects

Some objects in JavaScript — especially in the DOM — look like arrays although they aren’t proper arrays. It’s possible to create array-like objects yourself:

const arrayLike = {};

arrayLike[0] = 'a';

arrayLike[1] = 'b';

arrayLike[2] = 'c';

arrayLike.length = 3;

This object has a length and supports indexed element access (just like an array!) but it lacks array methods such as forEach on its prototype. It’s still possible to call array generics on it, though:

Array.prototype.forEach.call(arrayLike, (value, index) => {

  console.log(`${ index }: ${ value }`);

});

This code calls the Array.prototype.forEach built-in on the array-like object, and it works as expected. However, this is slower than calling forEach on a proper array, which is highly optimized in V8. If you plan on using array built-ins on this object more than once, consider turning it into an actual array beforehand:

const actualArray = Array.prototype.slice.call(arrayLike, 0);

actualArray.forEach((value, index) => {

  console.log(`${ index }: ${ value }`);

});

The one-time conversion cost can be worth the later optimizations, especially if you plan on performing lots of operations on the array.
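In ES2015 and later, Array.from is another standard way to do the same conversion. The sketch below uses a made-up array-like object; it makes no claim about how the two conversion approaches compare in speed.

```javascript
// Hypothetical array-like object: indexed properties plus a length.
const listLike = { 0: 'a', 1: 'b', 2: 'c', length: 3 };

// Array.from copies the indexed values into a real array.
const converted = Array.from(listLike);
console.log(Array.isArray(converted)); // true

// Array methods are now available directly on the result.
const labels = converted.map((value, index) => `${index}: ${value}`);
console.log(labels); // ['0: a', '1: b', '2: c']
```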

The arguments object, for example, is an array-like object. It’s possible to call array builtins on it, but such operations won’t be fully optimized the way they could be for a proper array.

const logArgs = function() {

  Array.prototype.forEach.call(arguments, (value, index) => {

    console.log(`${ index }: ${ value }`);

  });

};

logArgs('a', 'b', 'c');

ES2015 rest parameters can help here. They produce proper arrays that can be used instead of the array-like arguments objects in an elegant way.

const logArgs = (...args) => {

  args.forEach((value, index) => {

    console.log(`${ index }: ${ value }`);

  });

};

logArgs('a', 'b', 'c');

Nowadays, there’s no good reason to use the arguments object directly.

In general, avoid array-like objects whenever possible and use proper arrays instead.

Avoid polymorphism

If you have code that handles arrays of many different elements kinds, it can lead to polymorphic operations that are slower than a version of the code that only operates on a single elements kind.

Consider the following example, where a library function is called with various elements kinds. (Note that this is not the native Array.prototype.forEach, which has its own set of optimizations on top of the elements kinds-specific optimizations discussed in this article.)

const each = (array, callback) => {

  for (let index = 0; index < array.length; ++index) {

    const item = array[index];

    callback(item);

  }

};

const doSomething = (item) => console.log(item);

each([], () => {});

each(['a', 'b', 'c'], doSomething);

each([1.1, 2.2, 3.3], doSomething);

each([1, 2, 3], doSomething);

Built-in methods (such as Array.prototype.forEach) can deal with this kind of polymorphism much more efficiently, so consider using them instead of userland library functions in performance-sensitive situations.

Another example of monomorphism vs. polymorphism in V8 involves object shapes, also known as the hidden class of an object. To learn about that case, check out Vyacheslav’s article.

Debugging elements kinds

To figure out a given object’s “elements kind”, get a debug build of d8 (see “Building from source”), and run:

$ out.gn/x64.debug/d8 --allow-natives-syntax

This opens a d8 REPL in which special functions such as %DebugPrint(object) are available. The “elements” field in its output reveals the “elements kind” of any object you pass to it.

d8> const array = [1, 2, 3]; %DebugPrint(array);

DebugPrint: 0x1fbbad30fd71: [JSArray]

- map = 0x10a6f8a038b1 [FastProperties]

- prototype = 0x1212bb687ec1

- elements = 0x1fbbad30fd19<FixedArray[3]> [PACKED_SMI_ELEMENTS (COW)]

- length = 3

- properties = 0x219eb0702241<FixedArray[0]> {

#length: 0x219eb0764ac9<AccessorInfo> (const accessor descriptor)

}

- elements = 0x1fbbad30fd19<FixedArray[3]> {

0: 1

1: 2

2: 3

}

[…]

Note that “COW” stands for copy-on-write, which is yet another internal optimization. Don’t worry about that for now — that’s a topic for another blog post!

Another useful flag that’s available in debug builds is --trace-elements-transitions. Enable it to let V8 inform you whenever any elements kind transition takes place.

$ cat my-script.js

const array = [1, 2, 3];

array[3] = 4.56;

$ out.gn/x64.debug/d8 --trace-elements-transitions my-script.js

elements transition [PACKED_SMI_ELEMENTS -> PACKED_DOUBLE_ELEMENTS] in ~+34 at x.js:2 for 0x1df87228c911 <JSArray[3]> from 0x1df87228c889 <FixedArray[3]> to 0x1df87228c941 <FixedDoubleArray[22]>